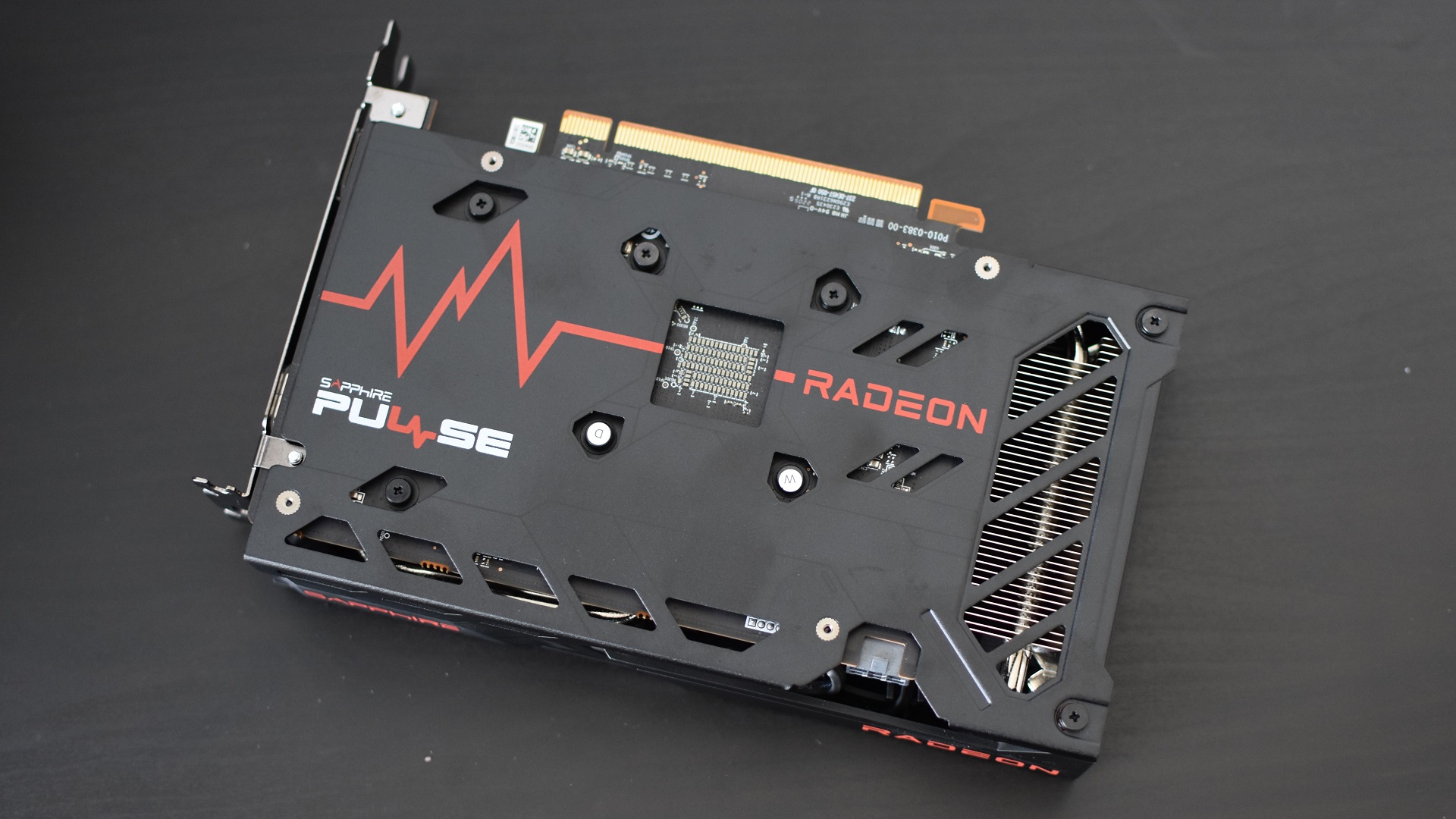

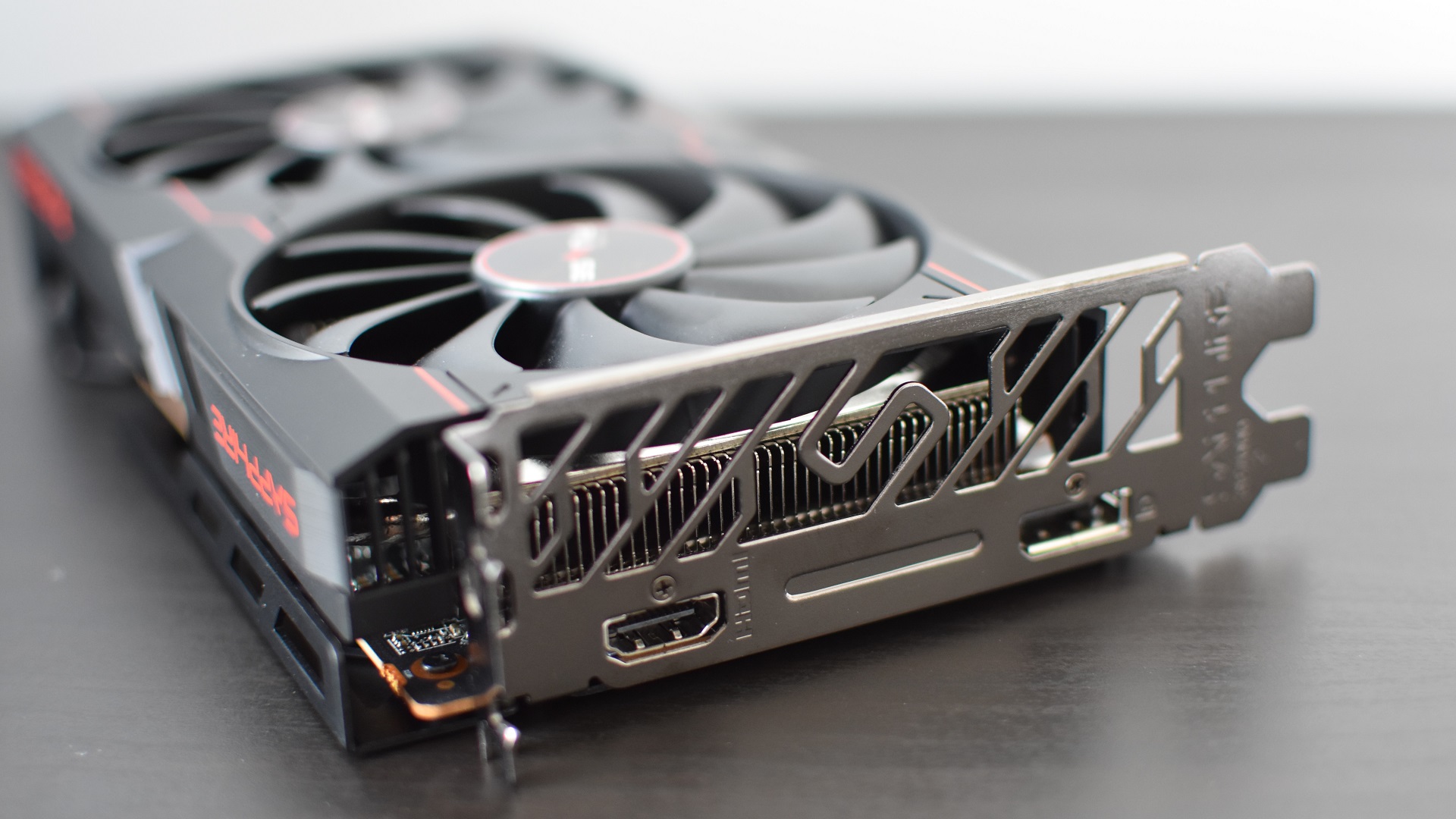

Alas, the RX 6500 XT isn’t just an inferior low-end alternative to the Nvidia GeForce RTX 3050 (one of the best graphics cards, and perhaps the outright best for budget builds right now). It’s an off-puttingly slow, sometimes bizarrely designed GPU in general. Instead of providing long-overdue relief to a truly fudged hardware market, it ends up feeling more like an attempt to sell any old second-rate kit to an audience that’s been left desperate for something, anything, that’s new and somewhat affordable.

On the model I’ve tested, the Sapphire Pulse Radeon RX 6500 XT, Sapphire have at least tried to perk up the clock speeds with factory overclocks: the maximum boost frequency is up from the stock 2815MHz to 2825MHz, while the more commonly achievable ‘Game clock’ speed is up from the stock 2610MHz to 2685MHz. They’ve also fitted a quiet yet effective cooler, which keeps core temperatures from rising above 61°C under load.

Even the stock clock speeds are, in fairness, incredibly high by any GPU standards. It’s just that they’re balanced by a relatively low number of cores, 1024, as well as a piddly 4GB of GDDR6 memory. That’s half of the RTX 3050’s VRAM, and hardly puts the RX 6500 XT in good stead for games with higher-quality textures, or for forays into 1440p.

AMD would likely point out that the RTX 3050 costs a fair bit more, which is true: the cheapest models I’ve found start at £299, with most landing somewhere between £300 and £400. That doesn’t change the fact that trying to get by with 4GB – no more than the Radeon RX 550 from 2017! – is playing with fire, as far as modern hardware demands are concerned. The RX 6500 XT also doesn’t help itself by only using a PCIe 4.0 x4 interface, meaning it can only take advantage of four PCIe lanes despite fitting into an x16 (16-lane) slot on the motherboard.
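To put those lane counts in perspective, here’s a back-of-envelope estimate of usable one-way link bandwidth, based on the per-lane signalling rates in the PCIe 3.0 and 4.0 specifications (8 and 16 GT/s respectively, both with 128b/130b encoding). It’s a minimal sketch that ignores packet and protocol overhead, so real-world throughput will be somewhat lower. For comparison it also covers the RTX 3050’s PCIe 4.0 x8 link and an x4 link running at PCIe 3.0 speeds; the `link_bandwidth_gbs` helper is a hypothetical name used purely for illustration.

```python
# Back-of-envelope PCIe link bandwidth (one direction), ignoring protocol overhead.
GT_PER_LANE = {"3.0": 8.0, "4.0": 16.0}  # gigatransfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130          # PCIe 3.0 and 4.0 both use 128b/130b encoding


def link_bandwidth_gbs(gen: str, lanes: int) -> float:
    """Approximate usable bandwidth in gigabytes per second for a PCIe link."""
    gigabits_per_s = GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_s / 8  # 8 bits per byte


links = [
    ("4.0", 16, "full x16 slot"),
    ("4.0", 8, "RTX 3050"),
    ("4.0", 4, "RX 6500 XT on PCIe 4.0"),
    ("3.0", 4, "RX 6500 XT on PCIe 3.0"),
]
for gen, lanes, label in links:
    # Prints roughly 31.5, 15.8, 7.9 and 3.9 GB/s respectively
    print(f"PCIe {gen} x{lanes} ({label}): {link_bandwidth_gbs(gen, lanes):.1f} GB/s")
```

The short version: an x4 link on a PCIe 3.0 board has roughly an eighth of the bandwidth of a full PCIe 4.0 x16 slot, and half of what the same card gets on PCIe 4.0.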
The RTX 3050 doesn’t make full use of the bandwidth either, using a PCIe 4.0 x8 interface, but between this and its 8GB of VRAM it’s less susceptible to game textures getting stuck in a bottleneck. And the RX 6500 XT’s performance gets worse – potentially a lot worse – if you install it in an older system that’s still relying on PCIe 3.0.

First, though, let’s see how it runs in more favourable conditions, namely our PCIe 4.0-based test rig (specs on the right). To give the RX 6500 XT some credit, it’s not incapable of smooth frame rates: at 1080p with Ultra quality settings, Hitman 3 managed a 92fps average in its Dubai benchmark and 72fps in its Dartmoor benchmark. 1440p was doable here too, with 57fps in Dubai and 49fps in Dartmoor, again on Ultra settings. Forza Horizon 4’s built-in benchmark landed dead-on 60fps on Ultra settings as well, and this only dropped to a playable 49fps at 1440p. Horizon Zero Dawn couldn’t quite make it to 60fps at 1080p/Ultimate, though its 54fps result came close. Engaging FSR, AMD’s upscaling tech, also pushed HZD’s 1440p/Ultimate average from 40fps up to 50fps.

There are, however, two problems with these results. The first is that they’re uniformly lower than what the RTX 3050 produced in identical conditions, with only the Hitman 3 1080p Dubai benchmark coming close (Nvidia’s card scored 95fps). Otherwise, it’s clear which is the 1080p king, with the RTX 3050 averaging 67fps in HZD, 86fps in the Hitman 3 Dartmoor test and 119fps in Forza Horizon 4. One more frame and that’d be double the RX 6500 XT’s effort.

The second problem is that results like those of Forza and Hitman 3 are in the minority: even at 1080p, most games will struggle to get over the 60fps line without demanding quality sacrifices. Shadow of the Tomb Raider is getting on a bit these days, but even this only made it to 57fps on a combination of the Highest preset and the most basic SMAA option. At 1440p, this plummeted to 34fps.
And although Total War: Three Kingdoms could pump out 87fps in its Battle benchmark on Medium quality, switching to Ultra quality sees it slump to 38fps. And again, that’s just at 1080p – try to step up to 1440p, and that 38fps becomes 20fps. Final Fantasy XV was another to come within grasping distance of 60fps at 1080p, but on its Highest graphics preset it could only average 50fps. This setting doesn’t include the fancy TurfEffects grass and boyband-grade HairWorks tech either: turning these on slapped it down to 33fps. Granted, the RTX 3050 only averaged 39fps with these extras, but without them it managed a visibly smoother 69fps. The RTX 3050 was much better at 1440p too, whereas the RX 6500 XT produced just 31fps at this resolution without TurfEffects and HairWorks, and 23fps with them.

Back at 1080p, Assassin’s Creed Odyssey was at least playable on its Ultra High settings, averaging 35fps, and dropping to High quality produced a slick 79fps. The newer, ostensibly more demanding Assassin’s Creed Valhalla actually got a slightly better 39fps on Ultra High quality, though that’s still not terribly impressive for a new graphics card in 2022. Switching to High offered smaller gains than in Odyssey, too, ultimately averaging a so-so 43fps.

The RX 6500 XT put Metro Exodus in similar straits, averaging 39fps on Ultra settings and needing a drop down to Normal for 70fps. Running Ultra settings at 1440p also produced the GPU’s worst showing yet, sputtering out just 10fps: a fraction of the RTX 3050’s 37fps. Next to struggle was Watch Dogs Legion, which at 1080p could manage 59fps on High, but only 30fps on Ultra. Make that 22fps for Ultra at 1440p, as well. Elden Ring did close the gap between the RX 6500 XT and the RTX 3050, somewhat: the AMD card’s 50fps at 1080p/Maximum wasn’t painfully far behind the Nvidia GPU’s 56fps.
That’s still more than a 10% advantage in the RTX 3050’s favour, mind, and the RX 6500 XT’s 1440p/Maximum result of 37fps wasn’t as smooth as the RTX 3050’s 42fps. If you were being profoundly generous, you could say the RX 6500 XT never technically fails to reach playable performance at max-quality 1080p – but whether you’re spending £200 on a new GPU or £1200, I don’t think “just above 30fps” is the level you should aspire to. Even the comparatively geriatric GeForce GTX 1060 can outpace this card at 1080p, and the GTX 1660 will downright embarrass it – the latter being available for about £260.

What’s more, one of the RX 6500 XT’s supposed upgrades over the RX 5500 XT – ray tracing support – hurts more than it helps. There’s simply not enough power here to handle the extra strain of all those realistic lighting and shadow effects: adding Ultra-quality ray traced shadows to Shadow of the Tomb Raider, at 1080p with the Highest preset, dragged its original 57fps average down to an untenable 20fps. It took dropping to Medium quality, plus Medium ray tracing, just to climb back up to 37fps. Metro Exodus couldn’t cope with the rays either, collapsing to 13fps after adding Ultra-quality RT effects to the general Ultra preset. Lowering the non-ray-traced settings to make up the difference is an option in any RT-supporting game, but doing so makes the game uglier, defeating the point of enabling these effects in the first place.

The RTX 3050 also feels the weight of ray tracing, and to be sure, could only really handle it in my testing with the aid of DLSS upscaling. But that’s something else the RX 6500 XT doesn’t have, and even if FSR were supported in Metro Exodus (it isn’t), its simple spatial upscaling rarely looks as sharp and clean as the AI-powered DLSS. Radeon Super Resolution is an alternative, though it doesn’t really do anything that Nvidia Image Scaling doesn’t already do for GeForce cards.
And although FSR is supported in some games that don’t also support DLSS, that’s not a reason to choose an AMD graphics card either, because FSR works fine on Nvidia hardware too.

This all essentially leaves the RX 6500 XT’s lower price as its main appealing quality. I get it – times are tough for PC build prices, and the extra £100 that an RTX 3050 would cost could easily go towards a more spacious SSD or one of the best CPUs. But if you’re looking to upgrade an existing system, one in which the CPU/motherboard combination still only supports PCIe 3.0, you won’t be getting your money’s worth.

After tinkering with my test PC’s BIOS to use PCIe 3.0 instead, I re-ran a few game benchmarks and found an often nasty downgrade from PCIe 4.0. Metro Exodus was the only one to get away practically unscathed, averaging 37fps at 1080p/Ultra – just a 1fps drop. Horizon Zero Dawn, on the other hand, stumbled from 54fps on 1080p/Ultimate to 46fps, a noticeable drop in smoothness. Watch Dogs Legion, already at the precipice of unplayability at 1080p/Ultra, sank from 30fps with PCIe 4.0 to just 24fps with PCIe 3.0. Shadow of the Tomb Raider, on 1080p/Highest/SMAA, dropped from 57fps to 49fps, with Assassin’s Creed Valhalla getting the worst of it: its 39fps average on Ultra High quality got chopped in half to 19fps.

Even very powerful graphics cards can lose 1-5% of their frame rates when using PCIe 3.0’s much lower bandwidth, but it’s usually nothing like the huge drops we can see here. It’s the RX 6500 XT’s unique combination of meagre VRAM and minimal PCIe lane usage that gets it in trouble: with so little memory to work with, the card leans on the PCIe bus to take up the slack, but even on PCIe 4.0, an x4 link is short on bandwidth. Apply the same pressure to the inherently slower PCIe 3.0 interface, and performance suffers even more.
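For a sense of scale against that typical 1-5% loss, here’s a quick calculation of the percentage drops from the PCIe 4.0 and PCIe 3.0 averages quoted above. It’s a minimal sketch that uses only those four 1080p results (Metro Exodus is left out); the `percent_drop` helper is a hypothetical name for illustration, not part of any benchmarking tool.

```python
# 1080p averages (fps) from the benchmarks above: (PCIe 4.0, PCIe 3.0)
results = {
    "Horizon Zero Dawn (Ultimate)": (54, 46),
    "Watch Dogs Legion (Ultra)": (30, 24),
    "Shadow of the Tomb Raider (Highest, SMAA)": (57, 49),
    "Assassin's Creed Valhalla (Ultra High)": (39, 19),
}


def percent_drop(before: float, after: float) -> float:
    """Percentage of the PCIe 4.0 frame rate lost when running on PCIe 3.0."""
    return (before - after) / before * 100


for game, (pcie4, pcie3) in results.items():
    print(f"{game}: {pcie4} -> {pcie3} fps ({percent_drop(pcie4, pcie3):.1f}% slower)")
```

That works out to about 14.8%, 20.0% and 14.0% for the first three games and 51.3% for Valhalla: several times the usual PCIe 3.0 penalty in every case.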
You could maybe claw a few frames back by pairing the RX 6500 XT with a good AMD Ryzen CPU, like the Ryzen 5 5600X: this would enable RDNA 2’s Smart Access Memory feature for better VRAM performance. But SAM’s impact varies by game, and in any case, it wouldn’t be enough to make up a serious PCIe 3.0-induced dip like that of Valhalla, nor would it put this card on equal terms with the RTX 3050. I know, GPU prices suck and have done for years, but you can do better than the RX 6500 XT, even if it means trawling the used market.